AI AMONGST US

Beyond the inflection point, we are now riding the skyrocketing curve. The next frontier? Interactive interfaces that provide contextual understanding of AI decision-making.

The Trend

It feels like air these days: it’s everywhere! Artificial intelligence (AI) wakes us up, tells us where we’ve been, optimizes our drive, runs our business tasks, reminds us about our wellness, and even suggests that we seek advice. As industries benefit radically from process optimization and consumers demand more “intelligent” things, AI is becoming part of just about every product and service we buy before we even notice. And like yin and yang, concerns about AI’s implications are rising alongside its benefits. These concerns range from the future of work and society to fears about the impact of automation on everything that moves or thinks. Enabling users to trust intelligent agents may pave the road for better human-machine partnerships.

The Impact

Machine learning (ML) algorithms are complex and can generate mistrust and disbelief. And even if AI processes could be explained, one of the challenges, it seems, is that most people simply cannot relate to them. We like to point out when AI gets it wrong, or we believe that it is wrong because we don’t agree with it, even though humans themselves sometimes reason in irrational ways.

A framework shift in the way we build machine-learning artifacts may be one way to produce more understandable AI outcomes. Recently, DARPA I2O launched the “Explainable Artificial Intelligence” (XAI) program to support research on this topic, with the ultimate goal of producing human-machine interactions that are “natural” and allow for a seamless translation of deep neural network decisions into visualizations and even sentences.

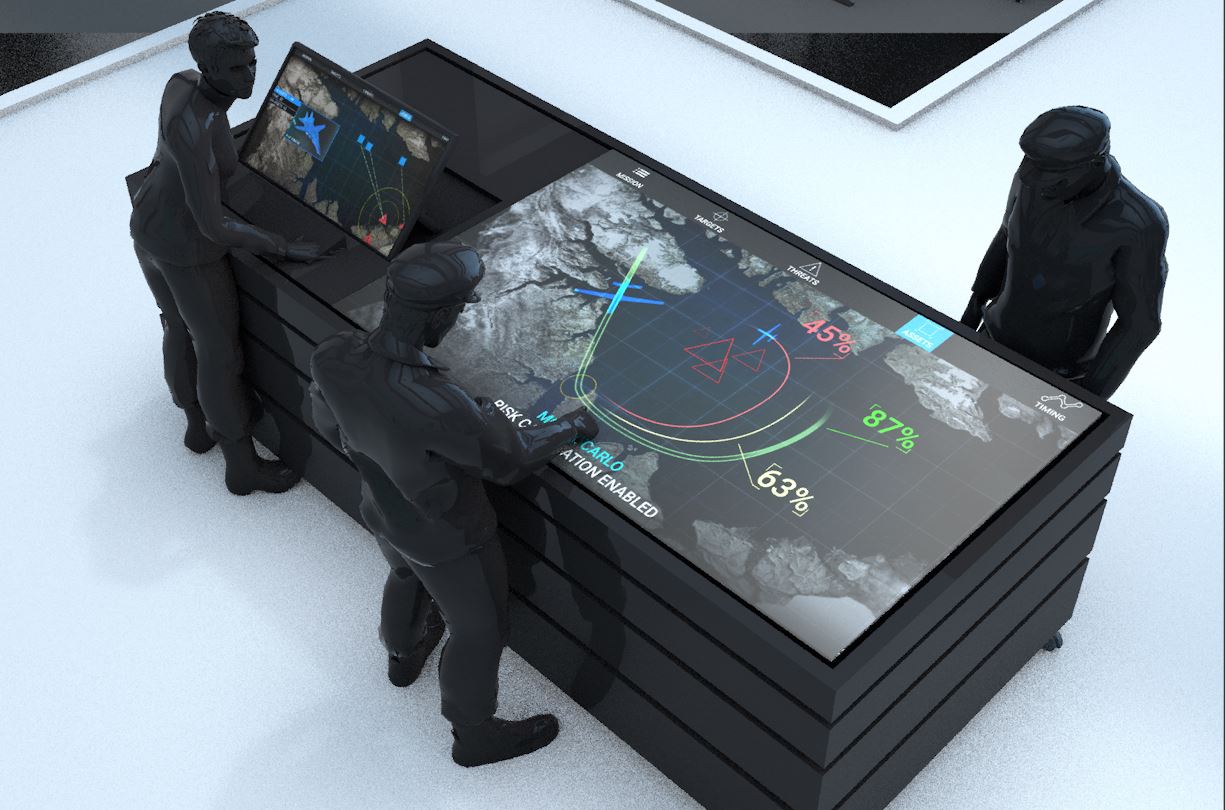

Another way to create better symbiotic relationships between humans and AI is to allow contribution and outcome control. While most people don’t need to understand all of the complexity of AI systems, giving end users the ability to influence decisions and control how they are implemented can significantly raise the level of trust. A recent project at TheInc showed just that. A client commissioned an AI-powered route-planning prototype to allow for joint mission planning. When the first set of users were exposed to it, they immediately asked why and how the decisions were made and how the level of risk could be considered acceptable. The next iteration allowed users to modify the sets of information provided to the AI engine, which were then re-clustered in real time so that the AI could provide a “user-modified route.” The research showed that while most users opted to support the AI-provided analysis, they found ways to compare the process from the human perspective with the AI perspective and to draw conclusions about why the AI made those recommendations.

Explanatory interfaces will permit users to interact with intelligent agents and improve trust while also allowing the AI to learn from human experience. In turn, humans will likely be more satisfied with the AI’s outcomes and resolutions and will be able to understand AI in very natural ways.